Recently a 6 km² block of ESRI ASCII Grid 5 m demo data was offered as a benchmark for testing the performance of TheoContour, the ‘no frills’ tool for contouring in AutoCAD. I’m not sure of the origin of the data but it looks a lot like Lidar, and this set certainly gives a good indication of how TheoContour could handle a 5 m post-spaced Lidar swath if needed.

The point data is lovely but it doesn’t read like a map, and even as a surfaced model it’s not really much use for mapping, so getting contours out of the points is a good first step to getting a map out of it. TheoContour is a great way of making that first step from data to map (getting from points to lines is something of a first step in most surveying processes these days!) but the sheer density of the data means quite a bit of care is needed when contouring such a big swath.

TheoContour has some slightly tricky settings, and the regular nature of the Lidar data gave me a good opportunity to get some comparative results with the sampling and sub-sampling controls.

AutoCAD addressable memory limit: First of all it’s worth noting that processing a surface containing all 237,765 points is a bit like asking TheoContour to hold up the whole sky! It will hit the addressable memory limit in AutoCAD at some point in the calculation process; more than likely this will be at the contour processing end, as this is when there is no escape from asking AutoCAD to do a lot of work plotting the nodes on the contour lines and joining them up. In most cases TheoContour does pretty well at collating the point data, so when getting to grips with a big job like this it’s the contour outputs that test the memory handling in AutoCAD.

First off, TheoContour needs to collate the points. This took about 75 s, and the command line ‘thermometer’ gives you a clue as to what’s going on. On completion, the collate command gives a command line report:

Select points to include in the contour model.

Select objects: ALL

237765 found

Select objects:

Selecting and checking points.

Building Surface.

Checking Surface

Min point 269639.5000, 737484.5000, 105.0000

Max point 272659.5000, 739444.5000, 609.4700

Processed 237769 points,

Boundary Contains 4 points,

Created 475532 surfaces

Average Surface Size: 9.63

Min Surface Size: 5.69

Max Surface Size: 2040.63
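The figures in this report can be cross-checked with a little arithmetic. The sketch below is only a sanity check, not anything TheoContour runs: the constant names are my own, the 5 m spacing comes from the data description, and the idea that the 4 extra processed points are boundary points added by the tool is an assumption read from the counts. The triangle count uses the standard result that a triangulation of n points with h of them on the outer boundary has 2n - 2 - h triangles.

```python
# Figures copied from the collate report; all names here are illustrative.
MIN_X, MIN_Y = 269639.5, 737484.5
MAX_X, MAX_Y = 272659.5, 739444.5
SPACING = 5.0        # grid post spacing in metres (from the data description)
SELECTED = 237765    # "237765 found"
PROCESSED = 237769   # "Processed 237769 points"
BOUNDARY = 4         # "Boundary Contains 4 points"
SURFACES = 475532    # "Created 475532 surfaces"

# A regular grid has (extent / spacing) + 1 posts along each axis.
cols = round((MAX_X - MIN_X) / SPACING) + 1
rows = round((MAX_Y - MIN_Y) / SPACING) + 1
grid_points = cols * rows

# Assumption: the processed points are the selected points plus the boundary points.
extra_points = PROCESSED - SELECTED

# Triangles in a triangulation of n points with h points on the outer boundary.
expected_triangles = 2 * PROCESSED - 2 - BOUNDARY

# Plan area of the block in square kilometres.
area_km2 = (MAX_X - MIN_X) * (MAX_Y - MIN_Y) / 1e6

print(cols, rows, grid_points)   # grid dimensions and point count
print(extra_points)              # points beyond the selection
print(expected_triangles)        # compare with SURFACES
print(round(area_km2, 2))        # compare with the quoted 6 km2
```

Under those assumptions the grid works out at 605 x 393 posts, which matches the 237,765 selected points, and the triangle formula reproduces the 475,532 surfaces in the report.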

The report gives us a clue as to how to handle this as contours. So the next step is to contour? Not quite: with a big processing order like this there will be a limit to what can be done, so the choice of contour interval, smoothing step and processing strategy becomes VERY important at this point! Ignore the need for a suitable interval at your peril:

Choosing data density over processing capacity: It helps to think of contour generation as ‘node building’. The height range is 504.47 m from highest to lowest point; if we are to contour at a 1 m interval we are asking for about 500 contour lines, each one ‘joining up’ a rough maximum of 5 points per metre along its length and then interpolating nodes at the changes of direction of the isoline. So an estimate of the required nodes per contour line might be based on the longest line (say the length of the perimeter in the worst case): 10,000 m x 5 = 50,000 nodes per polyline, x 500 polylines = 25 million nodes. For AutoCAD to plot and join 25 million nodes may be possible, but my system is best described as ‘average’ (i.e. the minimum I can afford for an AutoCAD 2011 platform), so I think working on the basis of a 1 m contour interval for the whole block at once is not viable.
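The same back-of-envelope estimate can be written as a few lines of arithmetic, which makes it easy to try other intervals before committing AutoCAD to the job. This is a rough sketch only: the function and parameter names are hypothetical, and the 5 nodes per metre and the 10,000 m worst-case line length are the rough guesses used above, not measured values.

```python
def node_budget(height_range_m, interval_m, longest_line_m, nodes_per_metre):
    """Worst-case node estimate: every contour as long as the longest line."""
    # Number of whole intervals that fit in the height range.
    contours = int(height_range_m // interval_m)
    # Nodes on one worst-case polyline.
    nodes_per_line = int(longest_line_m * nodes_per_metre)
    return contours, nodes_per_line, contours * nodes_per_line


# Figures from the text: 504.47 m height range, 1 m interval,
# perimeter of about 10,000 m, about 5 nodes per metre.
contours, nodes_per_line, total_nodes = node_budget(504.47, 1.0, 10_000, 5)
print(contours, nodes_per_line, total_nodes)
```

Calling `node_budget` with a different `interval_m` shows how quickly the worst-case total falls as the interval widens; the result is an upper bound, since most contours are far shorter than the perimeter.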

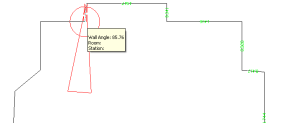

A 5 m interval would generate 100 contours, an 80% reduction in the amount of processing compared with 1 m. Working with a 5 m interval is still going to be slow, so to test the sampling settings I use a 10 m interval. Straight polylines (splines are very nice but AutoCAD will be pushed hard to generate the smoothing!) with no duplicate point checking further reduce the processing overheads. Because of the gridded nature of the point data there is a bias towards a ‘gridded’ or ‘stepped’ contour model:

By increasing the sub-sampling rate step by step, the ‘stepping’ effect in the contours begins to be reduced. Each increase in the sub-sampling rate increases the processing time, so there is a point where the sample rate needs to be reduced once a good sub-sampling result is achieved: the goal is to commit to the minimum amount of processing to get the smoothest line. The sub-sampling has the biggest effect on the stepping but also the biggest effect on the processing effort.

At 10/17 the contours have lost the stepping and even as straight polylines look like the terrain they depict.

With the sampling settings taking up the smoothing, the straight polylines work well. The nature of contours is such that the nesting of curves, the pinching of incised features and the moiré effect of lines on convex and concave slopes lead the eye to read the surface as a tactile object. TheoContour generates the lines as polylines, so editing the lines is not too difficult. Introducing an appropriate lineweight for the index contour and some sensible colours by layer gets the model behaving cartographically:
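As a rough illustration of the layering idea, the sketch below sorts contour elevations into index and intermediate layers. It is not part of TheoContour: the layer names, lineweights and the choice of every fifth contour as an index contour (a common cartographic convention) are all assumptions made for the example.

```python
# Hypothetical layer names and lineweights (mm); adjust to your own drawing standard.
LAYER_STYLE = {
    "CONTOUR_INDEX": {"lineweight_mm": 0.35},
    "CONTOUR_INTERMEDIATE": {"lineweight_mm": 0.13},
}


def contour_layer(elevation_m, interval_m=10.0, index_every=5):
    """Return the layer name for a contour at the given elevation.

    A contour is treated as an index contour when its elevation is a
    multiple of index_every * interval_m (assumed convention).
    """
    step = round(elevation_m / interval_m)
    if step % index_every == 0:
        return "CONTOUR_INDEX"
    return "CONTOUR_INTERMEDIATE"


# Classify the 10 m contours between 110 m and 160 m.
for elevation in range(110, 170, 10):
    layer = contour_layer(float(elevation))
    print(elevation, layer, LAYER_STYLE[layer]["lineweight_mm"])
```

With a 10 m interval this picks out the 50 m multiples as index contours; the polylines themselves would still need to be moved to the matching layers in AutoCAD by whatever means you prefer.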

With patience a 2m interval is possible:

Of course the contours are true 3D entities:

Download TheoContour for Bricscad here.

Download TheoContour (as part of the TheoLt Suite) for AutoCAD here.

This PCG file may be attached in the same way as any other AutoCAD block or image file.
